[100% Valid] 100% Pass CompTIA 220-901 Dumps Practice Test Certification Exam Video With High Quality

Are you still worried because you are new to preparing for the CompTIA 220-901 exam? “CompTIA A+ Certification Exam” is the name of the CompTIA 220-901 exam, and these 220-901 dumps cover all the knowledge points of the real CompTIA exam. Get a 100% pass on CompTIA 220-901 with high-quality dumps, practice tests, and certification exam videos. Pass4itsure’s CompTIA 220-901 exam questions and answers are updated (119 Q&As) and verified by experts.

The associated certification for the 220-901 exam is A+. Pass4itsure’s CompTIA https://www.pass4itsure.com/220-901.html exam questions and answers are developed in accordance with the latest syllabus.

Exam Code: 220-901

Exam Name: CompTIA A+ Certification Exam

Q&As: 296

[100% Valid CompTIA 220-901 Dumps From Google Drive]: https://drive.google.com/open?id=0BwxjZr-ZDwwWU3RVZHZ5YWNXQTQ

[100% Valid CompTIA 220-801 Dumps From Google Drive]: https://drive.google.com/open?id=0BwxjZr-ZDwwWWGUzdnM3M2lHQTQ

Pass4itsure Latest and Most Accurate CompTIA 220-901 Dumps Exam Q&As:

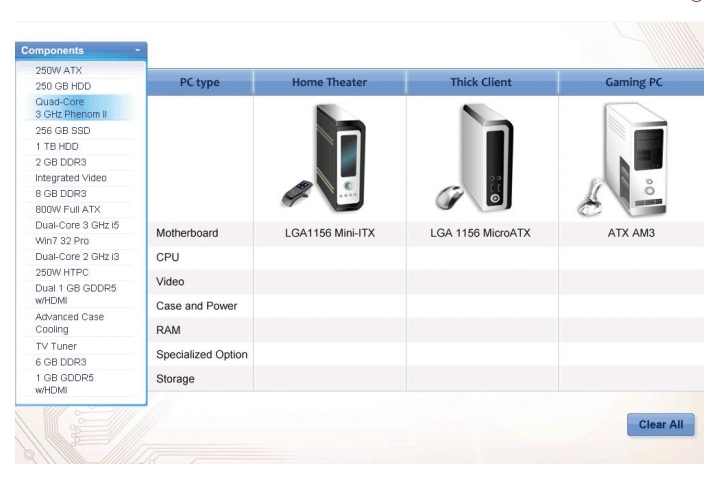

QUESTION 28

Drag the components from the list and place them in the correct devices.

Select and Place:

Correct Answer:

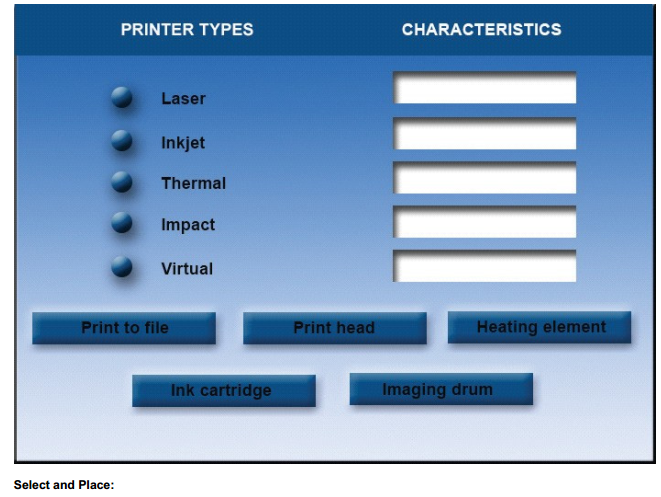

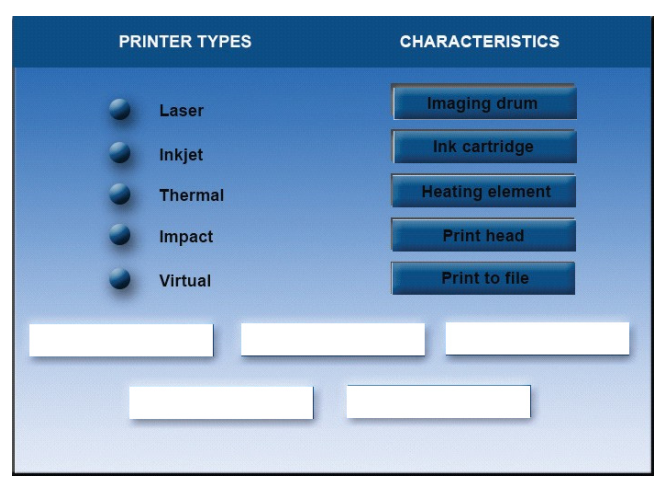

QUESTION 29

Drag and drop the characteristics to the correct printer types. Options may be used once.

QUESTION NO: 30

You have a cluster running with the FIFO Scheduler enabled. You submit a large job A to the cluster, which you expect to run for one hour. Then, you submit job B to the cluster, which you expect to run for only a couple of minutes. You submit both jobs with the same priority. Which two statements best describe how the FIFO Scheduler arbitrates the cluster resources for a job and its tasks?

A. Given Jobs A and B submitted in that order, all tasks from job A are guaranteed to finish before all tasks from job B.

B. The order of execution of tasks within a job may vary.

C. Tasks are scheduled in the order of their jobs’ submission.

D. The FIFO Scheduler will give, on average, an equal share of the cluster resources over the job lifecycle.

E. Because there is more than a single job on the cluster, the FIFO Scheduler will enforce a limit on the percentage of resources allocated to a particular job at any given time.

F. The FIFO Scheduler will pass an exception back to the client when job B is submitted, since all slots on the cluster are in use.

Answer: A, C

FIFO (first-in, first-out) scheduling ranks a job’s importance by when it was submitted. The original scheduling algorithm integrated into the JobTracker was called FIFO. In FIFO scheduling, the JobTracker pulled jobs from a work queue, oldest job first. This scheduler had no concept of job priority or size, but the approach was simple to implement and efficient.
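To make the scheduling order concrete, here is a minimal Python sketch of FIFO slot assignment. It is a toy model, not Hadoop’s JobTracker code: the `Job` class, the `fifo_assign` function and the fixed pool of free slots are hypothetical simplifications. Jobs are kept in submission order, and each free slot goes to the oldest job that still has unscheduled tasks.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Job:
    # Hypothetical toy job: a name plus a queue of not-yet-scheduled tasks.
    name: str
    pending_tasks: deque = field(default_factory=deque)


def fifo_assign(jobs, free_slots):
    """Hand out free task slots, always draining the oldest job first.

    `jobs` must be ordered by submission time. Priority and job size are
    ignored, which is the defining trait of FIFO scheduling.
    """
    assignments = []
    queue = deque(jobs)  # oldest job at the left
    while free_slots > 0 and queue:
        job = queue[0]
        if job.pending_tasks:
            assignments.append((job.name, job.pending_tasks.popleft()))
            free_slots -= 1
        else:
            queue.popleft()  # no unscheduled tasks left; move to the next job
    return assignments


job_a = Job("A", deque(f"a{i}" for i in range(3)))
job_b = Job("B", deque(["b0", "b1"]))
print(fifo_assign([job_a, job_b], free_slots=4))
# [('A', 'a0'), ('A', 'a1'), ('A', 'a2'), ('B', 'b0')]
```

Because the oldest job is always drained first, tasks are handed out in the order their jobs were submitted (option C); job size and priority play no role.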

QUESTION NO: 31

On a cluster running MapReduce v1 (MRv1), a MapReduce job is given a directory of 10 plain text files as its input directory. Each file is made up of 3 HDFS blocks. How many Mappers will run?

A. We cannot say; the number of Mappers is determined by the developer

B. 30

C. 10

D. 1

Answer: B
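The arithmetic behind answer B can be sketched in Python. This assumes the default behavior where each input split corresponds to one HDFS block, splits never span files, and every file is non-empty; the function name and the 150 MB example file size are hypothetical. Hadoop’s real FileInputFormat also lets the last split run slightly over the split size, which this sketch ignores.

```python
MB = 1024 * 1024  # bytes per megabyte


def count_map_tasks(file_sizes_bytes, split_size_bytes):
    """Estimate map tasks for an MRv1 job: one per input split.

    Assumes splits equal the HDFS block size, never span files, and
    every file is non-empty.
    """
    return sum(
        (size + split_size_bytes - 1) // split_size_bytes  # ceiling division
        for size in file_sizes_bytes
    )


# Hypothetical input: 10 files of 150 MB each, 64 MB blocks -> 3 blocks per file.
print(count_map_tasks([150 * MB] * 10, 64 * MB))  # 30
```

Ten files of three blocks each yield 10 × 3 = 30 map tasks, independent of anything the developer sets in the job code.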

QUESTION NO: 32

Your developers request that you enable them to use Hive on your Hadoop cluster. What do you install and/or configure?

A. Install the Hive interpreter on the client machines only, and configure a shared remote Hive Metastore.

B. Install the Hive Interpreter on the client machines and all the slave nodes, and configure a shared remote Hive Metastore.

C. Install the Hive interpreter on the master node running the JobTracker, and configure a shared remote Hive Metastore.

D. Install the Hive interpreter on the client machines and all nodes on the cluster

Answer: A

The Hive Interpreter runs on a client machine.

QUESTION NO: 33

How must you format the underlying filesystem of your Hadoop cluster’s slave nodes running on Linux?

A. They may be formatted in any Linux filesystem

B. They must be formatted as HDFS

C. They must be formatted as either ext3 or ext4

D. They must not be formatted; HDFS will format the filesystem automatically

Answer: A

The Hadoop Distributed File System is platform independent and can function on top of any underlying file system and operating system. Linux offers a variety of file system choices, each with caveats that affect HDFS. As a general best practice, if you are mounting disks solely for Hadoop data, mount them with the ‘noatime’ option, which disables access-time updates. This speeds up file reads. There are three popular Linux file system options to choose from:

Ext3

Ext4

XFS

Yahoo uses the ext3 file system for its Hadoop deployments. ext3 is also the default file system for many popular Linux OS flavours. Since HDFS on ext3 has been publicly tested on Yahoo’s cluster, it is a safe choice for the underlying file system. ext4 is the successor to ext3 and performs better with large files. ext4 also introduced delayed allocation of data, which decreases fragmentation and improves performance but adds a bit more risk of data loss during unplanned server outages. XFS offers better disk space utilization than ext3 and formats disks much faster than ext3, so a DataNode using XFS can be put into service sooner. Reference: Hortonworks, Linux File Systems for HDFS
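In practice, `noatime` is usually set in the options field of `/etc/fstab` for each data disk. The Python sketch below reads mount entries in the `/proc/mounts` format and lists the Hadoop data mount points that lack `noatime`. The function name and the `/data/1`, `/data/2` mount points are hypothetical; on a real Linux host you would pass the contents of `/proc/mounts` instead of the sample string.

```python
def mounts_missing_noatime(mounts_text, data_dirs):
    """Return data mount points whose options do not include 'noatime'.

    Each line of `mounts_text` follows the /proc/mounts layout:
    device mountpoint fstype options dump pass
    """
    missing = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        mountpoint, options = fields[1], fields[3]
        if mountpoint in data_dirs and "noatime" not in options.split(","):
            missing.append(mountpoint)
    return missing


sample = """\
/dev/sdb1 /data/1 ext4 rw,noatime 0 0
/dev/sdc1 /data/2 xfs rw,relatime 0 0
/dev/sda1 / ext4 rw,relatime 0 0
"""
print(mounts_missing_noatime(sample, {"/data/1", "/data/2"}))  # ['/data/2']
```

The check only looks at mount options, so it applies equally to ext3, ext4 and XFS data disks.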

QUESTION NO: 34

Your cluster is running MapReduce v1 (MRv1), with default replication set to 3 and a cluster block size of 64MB. Which of the following best describes the file read process when a client application connects to the cluster and requests a 50MB file?

A. The client queries the NameNode for the locations of the block, and reads all three copies. The first copy to complete transfer to the client is the one the client reads as part of Hadoop’s execution framework.

B. The client queries the NameNode for the locations of the block, and reads from the first location in the list it receives.

C. The client queries the NameNode for the locations of the block, and reads from a random location in the list it receives to eliminate network I/O loads by balancing which nodes it retrieves data from at any given time.

D. The client queries the NameNode and then retrieves the block from the nearest DataNode to the client and then passes that block back to the client.

Answer: D
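The read path in answer D can be modeled in a few lines of Python. This is a simplified sketch, not HDFS client code: the `Node`, `network_distance`, `blocks_needed` and `sorted_locations` names are hypothetical, the topology is a single data center, and the distances (0 for the same node, 2 for the same rack, 4 for a different rack) follow the convention Hadoop uses for its network topology. A 50MB file with a 64MB block size occupies a single block; the NameNode returns that block’s replica locations ordered by proximity to the client, and the client then reads directly from the closest DataNode.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Node:
    rack: str
    host: str


def network_distance(a: Node, b: Node) -> int:
    """Distance within one data center: 0 same node, 2 same rack, 4 different rack."""
    if a == b:
        return 0
    if a.rack == b.rack:
        return 2
    return 4


def blocks_needed(file_size_mb: int, block_size_mb: int) -> int:
    """Number of HDFS blocks a file occupies (the last block may be partial)."""
    return math.ceil(file_size_mb / block_size_mb)


def sorted_locations(client: Node, replicas: List[Node]) -> List[Node]:
    """Model of the NameNode ordering replica locations by proximity to the client."""
    return sorted(replicas, key=lambda node: network_distance(client, node))


client = Node("rack1", "client-host")
replicas = [Node("rack2", "dn5"), Node("rack1", "dn2"), Node("rack3", "dn9")]
print(blocks_needed(50, 64))                  # 1
print(sorted_locations(client, replicas)[0])  # Node(rack='rack1', host='dn2')
```

Only one replica is read, from the nearest DataNode, which is why options A and C do not describe HDFS behavior.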

At the same time, we constantly update our 220-901 dumps and training materials so that they closely simulate the real exam (https://www.pass4itsure.com/220-901.html). As a result, the Pass4itsure pass rate is very high.